Although Apple Intelligence isn’t the spotlight feature in iOS 26 this year, it still comes with some exciting updates, most notably Live Translation, now integrated directly into the Messages, Phone, and FaceTime apps. Apple Intelligence is also expanding support for more languages.

If you’d like to learn more about the new features in iOS 26, don’t miss this post: What’s New in iOS 26 Beta 1?

More Language Support

Apple has announced that later this year, Apple Intelligence will support eight additional languages:

- Danish

- Dutch

- Norwegian

- Portuguese (Portugal)

- Swedish

- Turkish

- Chinese (Traditional)

- Vietnamese

Live Translation

Available on iPhone, iPad, Mac and Apple Watch

Live Translation is now built into three core communication apps in iOS 26: Messages, Phone, and FaceTime. Apple is also releasing a Call Translation API that allows developers to bring this feature into their own apps.

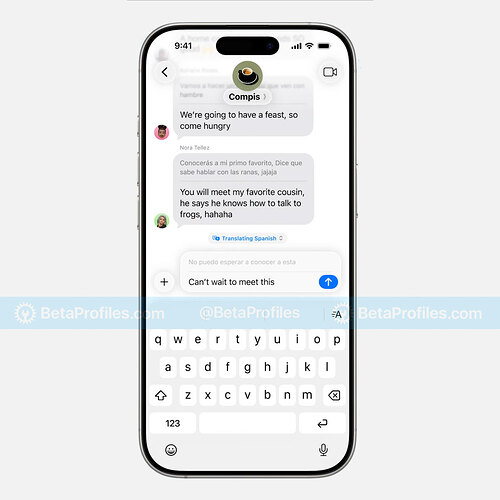

Messages

In the Messages app, Live Translation will automatically display translated text in the recipient’s language, making it easier to chat with someone who speaks a different language.

For example, if you message a friend in English and they speak Spanish, your message will be shown in both English and Spanish. If your friend also uses iOS 26, their Spanish replies will be auto-translated to English for you.

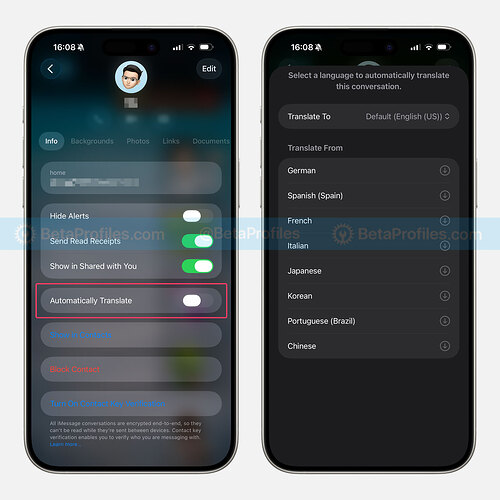

To enable Live Translation in a conversation, tap the recipient’s name at the top of the screen, turn on Automatically Translate, then choose the language you want to translate. It may take a moment to download the necessary language models.

Currently, Live Translation in Messages supports the following languages:

- English (U.S., UK)

- French (France)

- German

- Italian

- Japanese

- Korean

- Portuguese (Brazil)

- Spanish (Spain)

- Chinese (Simplified)

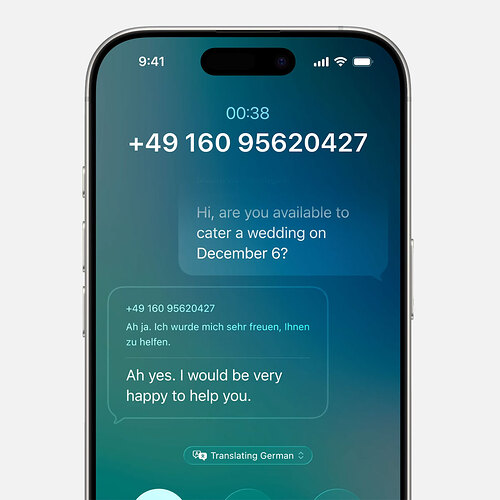

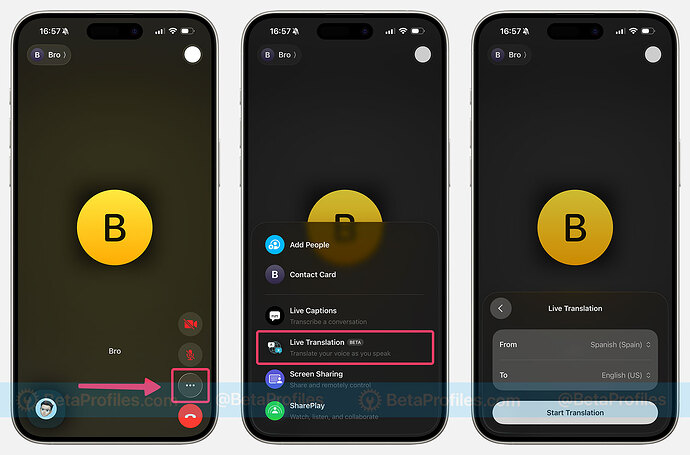

Phone

Just like in Messages, the Phone app now supports Live Translation for voice calls in foreign languages.

You can speak in your native language, and Live Translation will show a real-time translation in the other person’s language and read it aloud in an AI-generated voice. If they’re also using iOS 26, they’ll hear your words translated into their language as well.

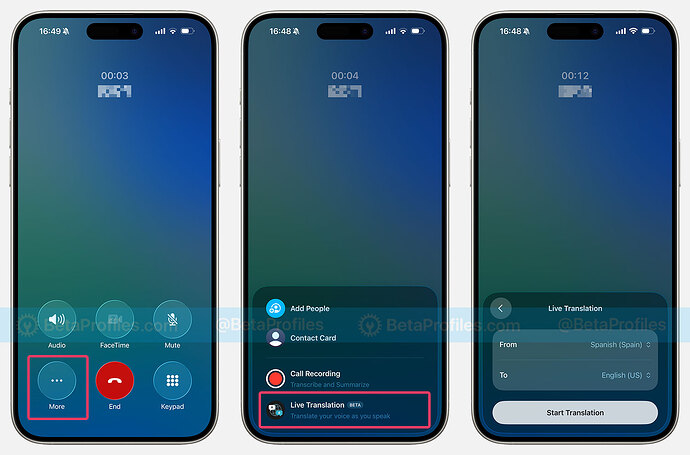

To enable Live Translation during a call, tap the More button after the other person picks up, tap Live Translation, choose the desired language, and tap Start Translation.

Supported languages in the Phone app currently include:

- English (U.S., UK)

- French (France)

- German

- Portuguese (Brazil)

- Spanish (Spain)

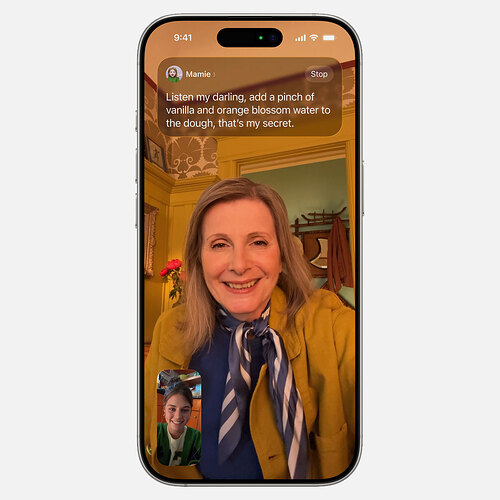

FaceTime

Live Translation in FaceTime works like subtitles in a movie. As the other person speaks in their language, you’ll see live subtitles translated into your language.

To enable this during a FaceTime call, tap the three dots in the bottom-right corner once the call is connected, tap Live Translation, choose your preferred language, then tap Start Translation.

Just like in Phone, supported languages currently include:

- English (U.S., UK)

- French (France)

- German

- Portuguese (Brazil)

- Spanish (Spain)

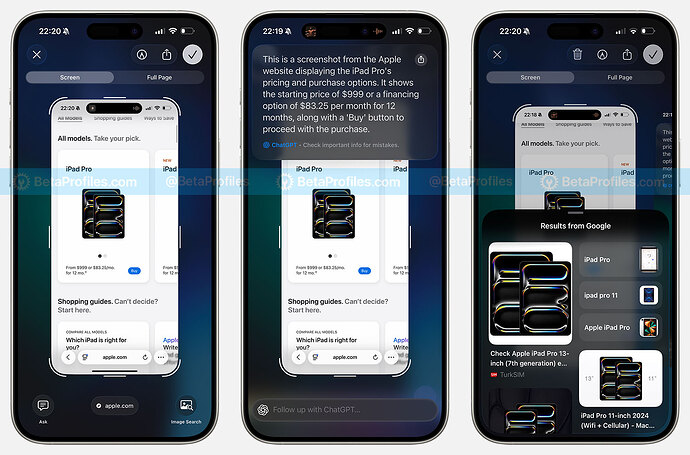
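Since the supported languages differ between Messages and the two calling apps, it can help to see the lists side by side. The snippet below is only an illustrative sketch: the language names are copied from the lists in this post, while the helper names (`apps_supporting`, `text_only_languages`) are made up for this example and are not part of any Apple API.

```python
# Live Translation languages per app, copied from the lists in this post.
# These sets reflect the beta lists above and may change in later releases.
MESSAGES = {
    "English (U.S., UK)",
    "French (France)",
    "German",
    "Italian",
    "Japanese",
    "Korean",
    "Portuguese (Brazil)",
    "Spanish (Spain)",
    "Chinese (Simplified)",
}

# Phone and FaceTime share the same, shorter list.
CALLS = {
    "English (U.S., UK)",
    "French (France)",
    "German",
    "Portuguese (Brazil)",
    "Spanish (Spain)",
}

SUPPORT = {"Messages": MESSAGES, "Phone": CALLS, "FaceTime": CALLS}


def apps_supporting(language):
    """Return the apps (sorted by name) whose list includes `language`."""
    return sorted(app for app, langs in SUPPORT.items() if language in langs)


def text_only_languages():
    """Return languages available in Messages but not in Phone or FaceTime."""
    return sorted(MESSAGES - CALLS)


if __name__ == "__main__":
    print(apps_supporting("German"))
    print(apps_supporting("Japanese"))
    print(text_only_languages())
```

In other words, every language that works in Phone and FaceTime also works in Messages, while Italian, Japanese, Korean, and Chinese (Simplified) are currently limited to text translation in Messages.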

Visual Intelligence

Available on iPhone

Visual Intelligence is now integrated into screenshots in iOS 26 to help identify what’s on the screen using a Google-powered feature called Highlight to Search. You can also ask ChatGPT for more context or information.

To use Highlight to Search, press the Side Button and Volume Up simultaneously to take a screenshot. You can then circle a specific object (or tap the Image Search button) to search on Google. Apple says this feature will also suggest results from your frequently used apps, like Etsy, Pinterest, or others. It’s especially useful when you’re trying to look up something you just saw on your screen.

There’s also an Ask button in the screenshot UI that lets you ask ChatGPT about what’s on the screen.

If the screenshot contains recognizable information like an event, Visual Intelligence can suggest adding it to your Calendar. This capability has been available since iOS 18.4.

Visual Intelligence can now also recognize more object types, including art, books, landmarks, natural sites, and sculptures.

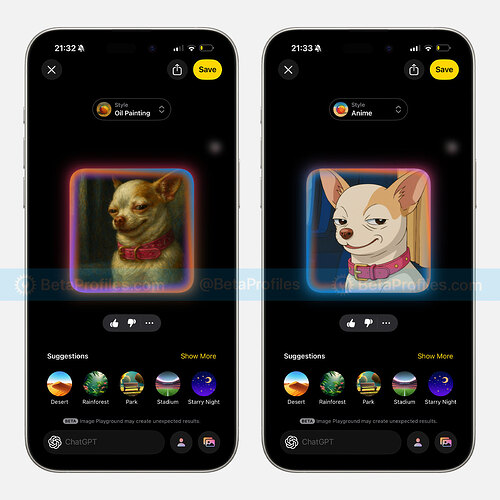

Image Playground

Available on iPhone, iPad, Mac and Vision Pro

Image Playground now includes several ChatGPT-inspired styles:

- Any Style

- Oil Painting

- Watercolor

- Vector

- Anime

You can mix and match these styles with your own prompts to generate creative images.

Genmoji

Available on iPhone, iPad, Mac and Vision Pro

Genmoji in iOS 26 allows you to combine two or more emojis to create a brand-new one, letting you express yourself in more personalized ways.

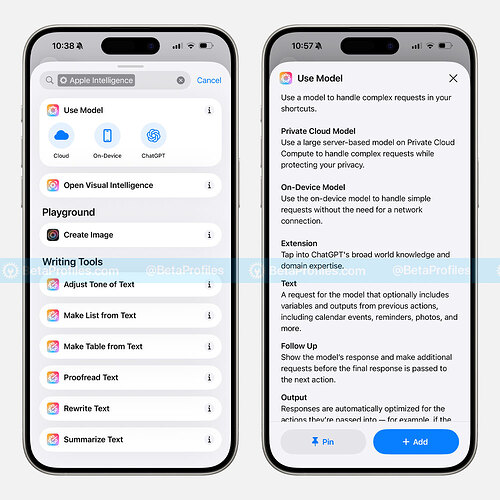

Shortcuts

Available on iPhone, iPad, and Mac

The Shortcuts app now supports a variety of new actions powered by Apple Intelligence, including:

- Use Model: Handles complex requests using one of three models: on-device, Private Cloud Compute, or ChatGPT.

- Open Visual Intelligence

- Create Image using Image Playground

- Writing Tools: Adjust Tone, Make List from Text, Make Table from Text, Proofread, Rewrite, and Summarize.

For example, you could build your own AI chatbot that runs on Private Cloud Compute. It works quite well, though it can sometimes respond slowly. You can always switch to a different model like ChatGPT if needed.

Here’s a download link for the “Ask AI” shortcut I created above. You can customize it to use any model you prefer.

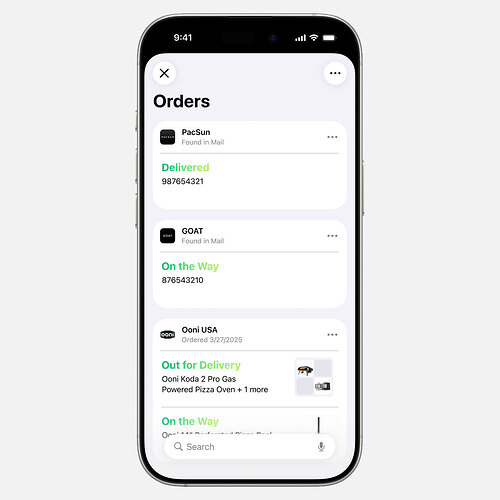

Apple Wallet

Available on iPhone

The Wallet app is now smarter, too. With Apple Intelligence, it can automatically scan your emails to track purchases and deliveries, even if you didn’t use Apple Pay. You’ll get summarized tracking details directly in Wallet.

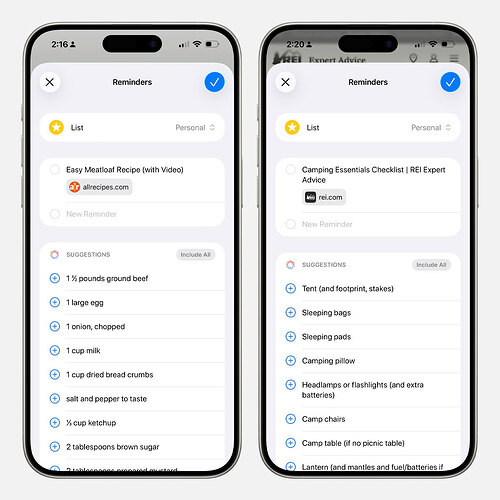

Reminders

Available on iPhone, iPad, and Mac

Apple Intelligence also boosts the Reminders app. It now understands the content you add and suggests relevant tasks.

For instance, if you share a recipe webpage to Reminders, it might suggest adding the ingredients to your shopping list, saving you time and effort.

Image credit: u/freaktheclown

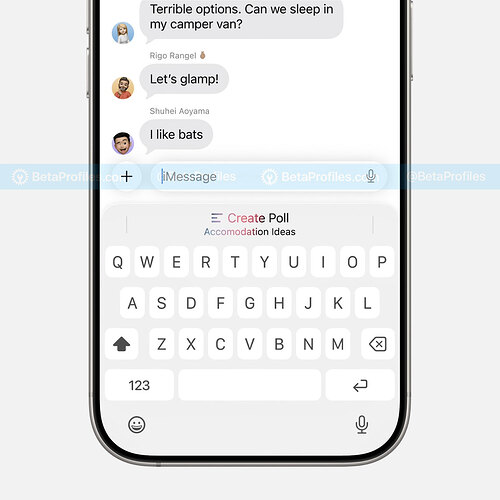

Messages

Available on iPhone, iPad, and Mac

The Messages app in iOS 26 now supports creating polls, but Apple Intelligence takes it a step further by suggesting a poll when it detects questions related to planning.

Additionally, Image Playground is integrated into Messages, allowing you to create custom chat backgrounds.

Workout Buddy

Available on Apple Watch with a paired iPhone

Workout Buddy uses an entirely new text-to-speech model, built on voice data from Apple Fitness+ trainers, to deliver personalized, encouraging messages based on insights from your current workout and fitness history.

While Workout Buddy is available on Apple Watch, it requires a nearby iPhone that supports Apple Intelligence, and Bluetooth headphones must be connected. Currently, it’s only available in English and supports the following popular workout types:

- Outdoor and Indoor Run

- Outdoor and Indoor Walk

- Outdoor Cycle

- HIIT

- Functional Strength Training

- Traditional Strength Training

Foundation Models Framework

Apple is also launching a Foundation Models Framework for developers. This allows third-party apps to tap into Apple Intelligence features like summarization, content generation, and text extraction, powered by system-level foundation models.

You can learn more about the Foundation Models Framework on the Apple Developer website.